AI Advertising Enters Its Signal Era

The Agentic Ad Revolution: Why Three New Protocols Are About to Rewrite the Open Web

AI is no longer an emerging capability in advertising. It is becoming the default decision layer.

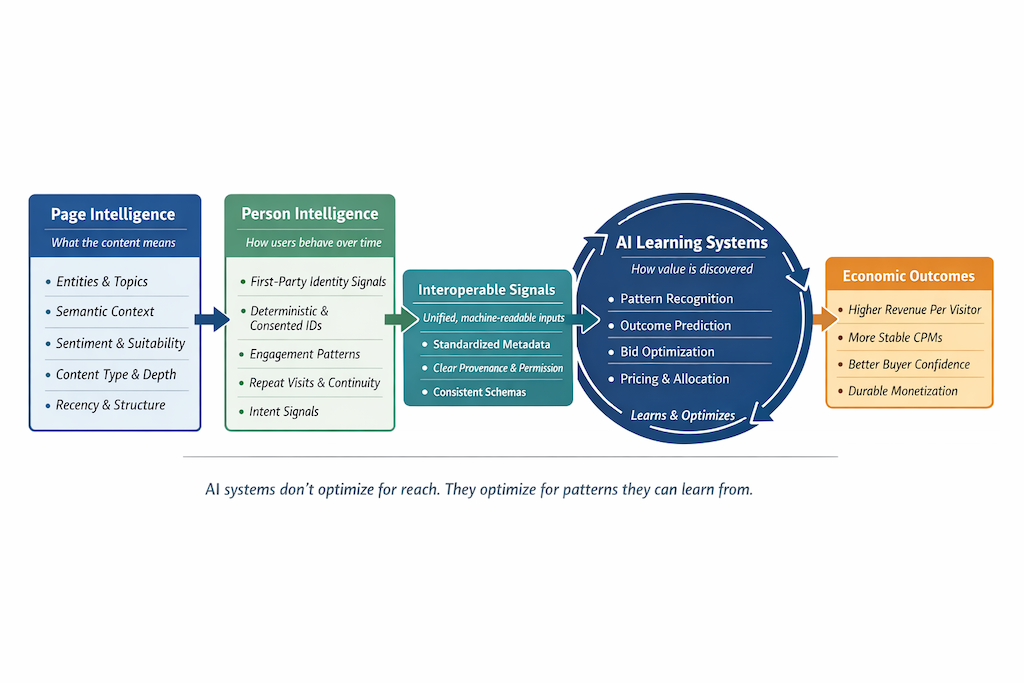

As AI systems increasingly shape discovery, media buying, and optimization, the source of competitive advantage has quietly shifted. The question is no longer who has the most advanced model or the most automation. It is who supplies the most interpretable, interoperable, and trusted signals into those systems.

This matters because AI does not invent value on its own. It reallocates value based on the inputs it can understand, compare, and learn from over time. By 2026, the publishers and platforms that control those inputs will capture a disproportionate share of economic upside, even as overall traffic becomes harder to sustain.

The shift does not arrive as a single disruption. It shows up gradually, impression by impression, as AI systems learn where outcomes actually come from.

From Containers to Meaning: The Evolution of Page Intelligence

For most of digital advertising’s history, context functioned as a proxy for placement. An ad appeared on a sports page, or a finance page, or a page deemed “safe.”

That logic was sufficient when buying decisions were human-driven and targeting signals were coarse. AI systems require something fundamentally different: they need to understand meaning. That means not just which words appear on a page, but what the content represents, why the user is there, and what mindset that moment reflects. This marks the transition from contextual containers to page intelligence.

Page intelligence serves two audiences at once. The same machine-readable structure that helps AI agents understand content for discovery also improves contextual precision in the bidstream. What makes content legible to AI crawlers also makes inventory more intelligible to AI buying systems.

Crucially, this intelligence does not require publishers to build proprietary models. It emerges from structure: clear HTML hierarchy, consistent headings, standardized Schema markup, and automated classifiers aligned to clear taxonomies. These elements allow machines to identify entities, authority, content type, and intent without ambiguity. AI does not need novelty here. It needs consistency.
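To make "clear HTML hierarchy" concrete, here is a minimal sketch of a heading audit using only Python's standard library. The `skipped_levels` helper and the sample markup are hypothetical illustrations, not part of any ad tech specification. The sketch simply flags headings that jump more than one level deeper than the heading before them, one common source of ambiguity for machine readers.

```python
from html.parser import HTMLParser


class HeadingOutline(HTMLParser):
    """Collects (level, text) pairs for h1-h6 elements in document order."""

    def __init__(self):
        super().__init__()
        self.headings = []   # list of (level, text)
        self._level = None   # level of the heading currently open, if any
        self._buffer = []    # text fragments seen inside the open heading

    def handle_starttag(self, tag, attrs):
        # Match h1..h6 only (the length check excludes tags such as "html").
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self._level = int(tag[1])
            self._buffer = []

    def handle_data(self, data):
        if self._level is not None:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if self._level is not None and tag == f"h{self._level}":
            text = "".join(self._buffer).strip()
            self.headings.append((self._level, text))
            self._level = None


def skipped_levels(html):
    """Return headings that sit more than one level below the previous heading."""
    parser = HeadingOutline()
    parser.feed(html)
    problems = []
    previous = 0  # treat the document start as level 0, so an h1 is expected first
    for level, text in parser.headings:
        if level > previous + 1:
            problems.append((level, text))
        previous = level
    return problems


# Hypothetical page fragment: the h4 skips the h3 level.
sample = """
<h1>Rate decision</h1>
<h2>What changed</h2>
<h4>Footnotes</h4>
"""
print(skipped_levels(sample))  # prints [(4, 'Footnotes')]
```

A check like this is cheap to run in a publishing pipeline, which fits the point above: the goal is consistency, not novelty.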

The Revenue Reset: From Volume to Value

AI-driven search and answer interfaces increasingly resolve intent without referral clicks. Independent analyses show that users are significantly less likely to click through to publisher sites when AI-generated summaries appear, with traditional organic click-through rates falling sharply on affected queries.

The economics are hard to ignore. Fewer clicks mean fewer visits, fewer impressions, and fewer opportunities to monetize volume. For publishers built on scale economics, this feels existential. But what is unfolding is not simply a traffic problem. It is a reallocation of value.

As top-of-funnel volume compresses, revenue concentrates downstream. Publishers that convert remaining attention into repeat usage, engagement, and known relationships are seeing materially different outcomes than those that continue to optimize for reach alone.

Research into engagement economics shows that organizations prioritizing engagement have grown revenue even as overall traffic declines. The gap between casual and committed audiences is dramatic. Highly engaged users generate orders of magnitude more revenue than one-off visitors.

In an AI-driven market, yield follows clarity. The better a system can understand who the user is, what they are doing, and where the impression sits in a decision journey, the more confidently it prices that opportunity.

The Evolution of Person-Level Intelligence

Page intelligence explains where an impression occurs and what it represents. Person intelligence explains who is behind that impression and how they behave over time.

Historically, person-level understanding in advertising was dominated by third-party identifiers and probabilistic inference. These approaches were optimized for reach and scale, but they were brittle. They decayed quickly, struggled across devices, and provided noisy inputs for learning systems.

AI changes the requirements. AI systems do not simply activate against identity. They learn from behavior. They look for repeatability, consistency, and signal persistence across time and context. This is why first-party data matters differently in an AI market. For AI systems, first-party data is not merely safer or more compliant. It is more learnable.

First-party signals tend to have properties that AI systems value:

- They recur across sessions.

- They align directly to real user behavior.

- They are collected in consistent environments.

This is also why deterministic identity, when available, tends to outperform probabilistic substitutes. It reduces ambiguity. It gives AI systems something solid to anchor learning against.

Person intelligence, in this sense, is not about surveillance or granularity. It is about continuity: the ability to recognize patterns over time without re-guessing who the user might be on every impression.

From Normalization to Interoperability

The signal challenge is often framed as a problem of normalization. That framing is technically correct, but strategically incomplete.

The market is actually converging on interoperability.

AI systems do not require perfectly unified datasets. They require signals that work together. Identity, context, engagement, and quality do not need to live in a single system. They need to be interpretable within a shared frame.

Interoperability is what allows these signals to snap together without bespoke translation. It enables learning to transfer across buying systems, measurement systems, and curation workflows. Value accrues not to those with the most data, but to those whose signals align cleanly enough for machines to learn from them.

Machine Legibility Beats Custom Intelligence

As AI adoption accelerates, it is tempting to assume that advantage will accrue only to those building bespoke intelligence layers.

In practice, much of the signal surface AI leverages already exists.

Structured data, schema markup, and consistent metadata allow machines to identify entities, authorship, authority, and content type. They distinguish evergreen information from time-sensitive updates. They clarify relationships between topics, products, and concepts.
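As one illustration of what this markup can look like, the sketch below builds a schema.org `Article` description as JSON-LD. The `article_jsonld` helper and every example value (headline, author, dates, topics) are hypothetical; only the schema.org property names (`headline`, `author`, `datePublished`, `dateModified`, `about`) come from the public vocabulary.

```python
import json


def article_jsonld(headline, author_name, published, modified, topics):
    """Build a schema.org Article description as a JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        # Authorship as a named entity rather than a free-text byline.
        "author": {"@type": "Person", "name": author_name},
        # Publication and modification dates help separate evergreen
        # content from time-sensitive updates.
        "datePublished": published,
        "dateModified": modified,
        # Topics expressed as entities, not just keywords in body text.
        "about": [{"@type": "Thing", "name": topic} for topic in topics],
    }
    return json.dumps(data, indent=2)


# Hypothetical example values, for illustration only.
markup = article_jsonld(
    headline="Central bank holds rates steady",
    author_name="Jane Example",
    published="2025-01-15",
    modified="2025-01-16",
    topics=["Interest rates", "Monetary policy"],
)

# JSON-LD is typically embedded in the page inside a script element.
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```

Nothing here requires a proprietary model. It restates what the page already knows about itself in a form machines can read without guessing.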

These mechanisms matter because they reduce ambiguity. They make environments easier for machines to reason about at scale.

AI does not require publishers to invent intelligence from scratch. It requires environments expressed in ways machines can reliably interpret.

Quality Becomes a Learned Signal

As generative tools lower the cost of content creation, the supply of low-quality inventory expands rapidly. AI buying systems respond predictably. They learn which environments produce outcomes and which generate waste.

Over time, signals tied to content depth, engagement consistency, layout stability, repeat user behavior, and low risk of invalid traffic begin to influence pricing. Often this happens without explicit labeling. Systems infer quality by observing results. In an AI-driven market, quality is not asserted. It is learned.
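A toy model can show what "learned, not asserted" means in practice. The sketch below is an assumption-laden illustration, not a description of how any real buying system works: it treats each impression as a success or failure and keeps a smoothed outcome rate per environment (a Beta-Bernoulli posterior mean). The `QualityEstimate` class, the prior values, and the environment counts are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class QualityEstimate:
    """Smoothed outcome rate for one environment (Beta-Bernoulli posterior mean)."""

    # Assumed prior: roughly a 1% outcome rate, worth 100 impressions of evidence.
    prior_successes: float = 1.0
    prior_failures: float = 99.0
    successes: int = 0
    impressions: int = 0

    def observe(self, outcome: bool) -> None:
        """Record one impression and whether it produced the desired outcome."""
        self.impressions += 1
        if outcome:
            self.successes += 1

    @property
    def rate(self) -> float:
        """Posterior mean outcome rate given the prior and observed results."""
        a = self.prior_successes + self.successes
        b = self.prior_failures + (self.impressions - self.successes)
        return a / (a + b)


# Hypothetical environments: same impression volume, different outcomes.
deep_content = QualityEstimate()
thin_content = QualityEstimate()
for i in range(1000):
    deep_content.observe(i < 30)  # 30 outcomes in 1,000 impressions
    thin_content.observe(i < 2)   # 2 outcomes in 1,000 impressions

print(round(deep_content.rate, 4), round(thin_content.rate, 4))
```

No label ever marks either environment as high or low quality. The gap in estimated rates emerges from observed results alone, and a bidder that prices against those estimates pays more for one environment than the other.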

The Quiet Advantage

AI will continue to improve. Automation will continue to expand. Those dynamics are inevitable. What remains unevenly distributed is the ability to consistently supply signals that AI systems can understand, trust, and learn from.

By the end of 2026, publishers will not be differentiated by the amount of inventory they have, but by the meaning, continuity, and permission they can attach to each impression.

That advantage does not announce itself loudly. It appears quietly, impression by impression, as AI systems learn where value lives.

Want to learn more? Download Our Most Recent Definitive Guide to the Agentic Advertising Revolution

If you’re future-proofing your business—whether you’re a publisher, advertiser, or platform—this guide to agentic advertising will help you:

- Understand the technical architecture of UCP, AdCP, and ARTF

- Benchmark where your stack is today—and what to upgrade first

- Design privacy‑safe workflows that outperform legacy approaches

- Prioritize quick wins and map a pragmatic adoption roadmap

Ready to see how it all fits together? Download the white paper now and start building your agentic advantage.